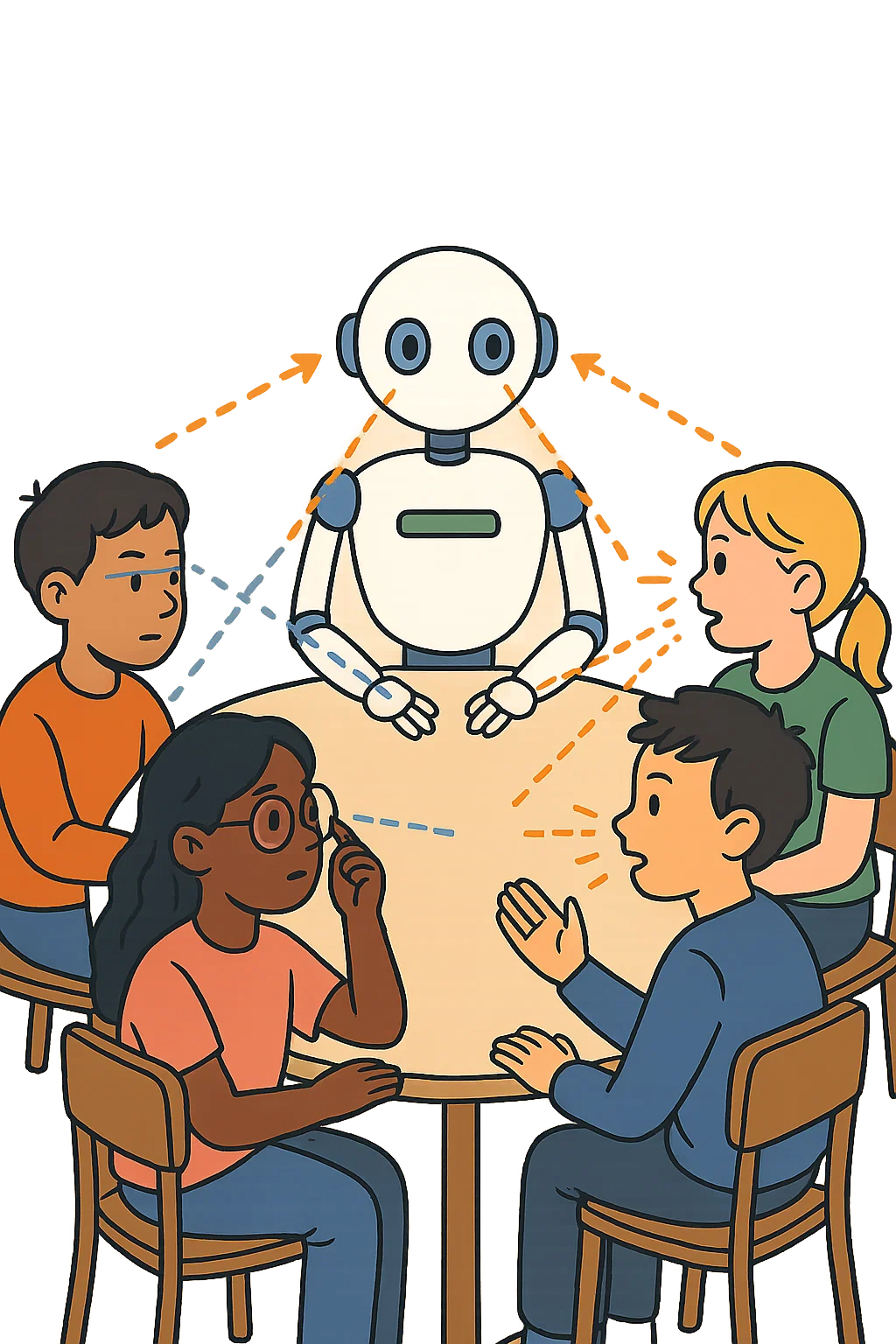

Participation Detection and Robot Mediation for Inclusive Child Group Interactions

Real-time sensing and robot strategies to support equitable participation among mixed-ability children in group interactions (WIP).

In mixed-ability groups, children with visual or auditory impairments often face barriers to participating fully in group interactions. This project, a collaboration between Carnegie Mellon University (USA) and Universidade de Lisboa (Portugal), addresses this problem by developing real-time multimodal metrics of group participation and using them to guide the behavior of socially assistive robots so that they foster inclusion and equity in group dynamics.

Using synchronized cameras and microphones, we extract visual and auditory cues such as gaze direction, head nods, speech turns, and interruptions. These cues are processed in real time with tools such as OpenFace and MediaPipe to generate composite metrics of group engagement.
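
As an illustration only, the sketch below shows one way speech turns and a gaze cue could be combined into per-child and group-level participation metrics for a single time window. It assumes that upstream processing already provides diarized speech turns (who spoke, from when to when) and, for each child, the number of seconds they were looked at by peers. The `Turn` record, the interruption rule (a turn that starts while another child is still speaking), the 50/50 speech/gaze weighting, and the entropy-based equity score are all hypothetical choices, not the project's actual metrics.

```python
from dataclasses import dataclass
from math import log


@dataclass
class Turn:
    speaker: str  # child identifier (assumed to come from speaker diarization)
    start: float  # seconds
    end: float    # seconds


@dataclass
class GroupMetrics:
    speaking_share: dict  # fraction of total speaking time per child
    turns: dict           # number of speech turns per child
    interruptions: dict   # turns started while another child was still speaking
    composite: dict       # weighted mix of speaking share and gaze-received share
    equity: float         # normalized entropy of speaking shares, in [0, 1]


def _normalize(values: dict) -> dict:
    """Scale values so they sum to 1 (all zeros if the total is 0)."""
    total = sum(values.values())
    return {k: (v / total if total > 0 else 0.0) for k, v in values.items()}


def participation_metrics(turns, children, gaze_received, w_speech=0.5):
    """Compute hypothetical participation metrics for one time window.

    gaze_received maps each child to seconds spent being looked at by peers
    (an assumed output of an upstream gaze estimator). w_speech is an
    illustrative weight for the speech component of the composite score.
    """
    talk = {c: 0.0 for c in children}
    n_turns = {c: 0 for c in children}
    interrupts = {c: 0 for c in children}
    ordered = sorted(turns, key=lambda t: t.start)
    for i, t in enumerate(ordered):
        talk[t.speaker] += t.end - t.start
        n_turns[t.speaker] += 1
        # Interruption: this turn starts while an earlier turn by another child is ongoing.
        if any(p.speaker != t.speaker and p.start < t.start < p.end for p in ordered[:i]):
            interrupts[t.speaker] += 1

    share = _normalize(talk)
    gaze_share = _normalize({c: gaze_received.get(c, 0.0) for c in children})
    composite = {c: w_speech * share[c] + (1 - w_speech) * gaze_share[c] for c in children}

    # Normalized Shannon entropy: 1.0 when speaking time is split evenly,
    # 0.0 when a single child holds the floor.
    entropy = -sum(s * log(s) for s in share.values() if s > 0)
    equity = entropy / log(len(children)) if len(children) > 1 else 1.0
    return GroupMetrics(share, n_turns, interrupts, composite, equity)
```

Normalized entropy is one common way to summarize how evenly the floor is shared; a Gini coefficient or the minimum speaking share would be reasonable alternatives.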

These real-time metrics inform a range of robot mediation strategies aimed at promoting equitable participation. The outcomes of this research are expected to support more inclusive educational and social environments through intelligent, context-aware robotic systems.
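
A minimal rule-based sketch of how such metrics might drive mediation, assuming the robot receives each child's speaking share, each child's interruption count, and a group equity score in [0, 1] once per decision window. The action labels (`invite_turn`, `remind_turn_taking`, `no_action`) and both thresholds are hypothetical placeholders, not the strategies used in this project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MediationAction:
    kind: str              # hypothetical action label, e.g. "invite_turn"
    target: Optional[str]  # child the robot addresses, or None for the whole group


def choose_action(share: dict, interruptions: dict, equity: float,
                  equity_threshold: float = 0.8,  # hypothetical threshold
                  max_interruptions: int = 3      # hypothetical threshold
                  ) -> MediationAction:
    """Pick at most one robot action for the current decision window."""
    # Rule 1: if some child interrupts often, remind the whole group about turn-taking.
    worst = max(interruptions, key=interruptions.get, default=None)
    if worst is not None and interruptions[worst] >= max_interruptions:
        return MediationAction("remind_turn_taking", None)
    # Rule 2: if participation is unbalanced, invite the quietest child to contribute.
    if equity < equity_threshold and share:
        quietest = min(share, key=share.get)
        return MediationAction("invite_turn", quietest)
    # Rule 3: otherwise the robot stays passive.
    return MediationAction("no_action", None)
```

For example, with speaking shares of 0.6, 0.3, and 0.1, no frequent interrupter, and an equity score of 0.7, the function returns an `invite_turn` action addressed to the quietest child. Returning at most one action per window is one simple way to keep the robot from intervening too often.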