Explainable AI Decision-Making in Human-AI Groups

A closed-loop machine teaching framework that uses explainable robot demonstrations and particle filters to model and adapt to individual and group beliefs, improving human understanding of robot decision-making in teams.

This research focuses on improving the transparency and effectiveness of human-robot collaboration in human groups through explainable robot demonstrations. The goal is to help human collaborators understand the general decision-making policy a robot follows for a task.

This work develops a closed-loop machine teaching framework for transparent human-robot collaboration in teams. By combining counterfactual reasoning, particle filter-based belief modeling, and pedagogical scaffolding, the system helps diverse human teammates understand and predict robot behavior, especially in time-constrained or resource-limited settings (Jayaraman et al., 2024).

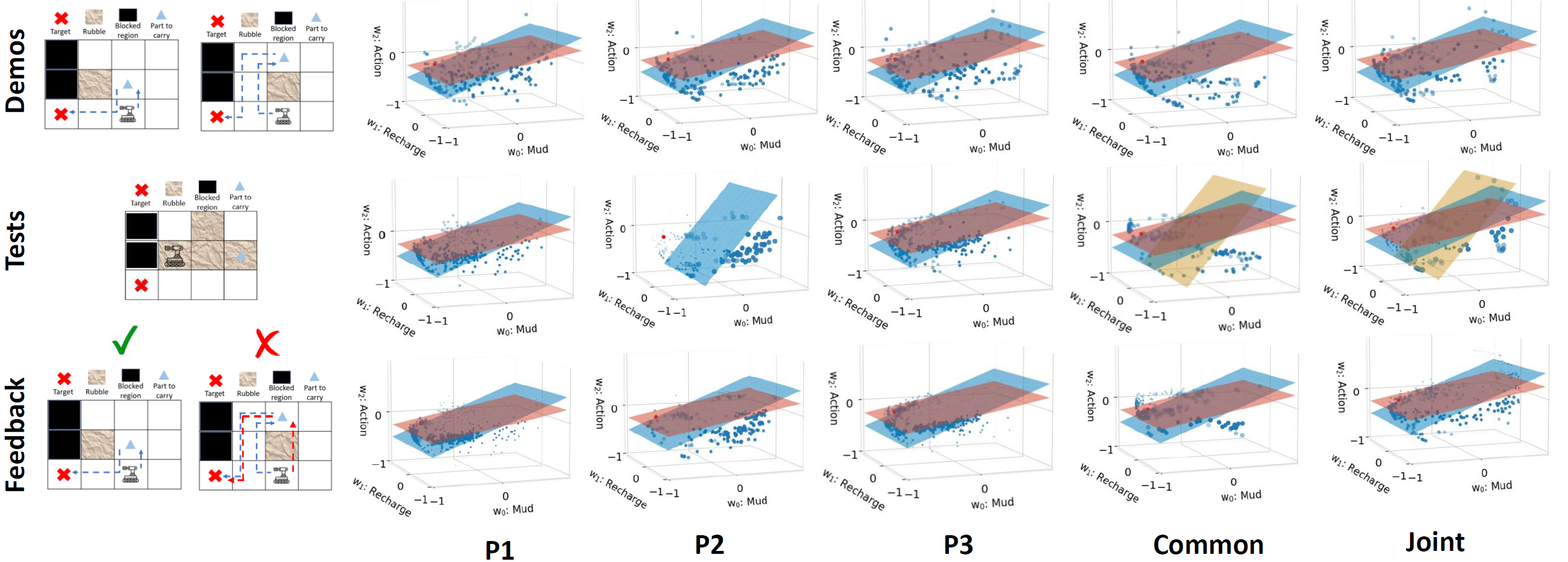

The approach dynamically models and updates individual and team beliefs about a robot’s decision-making policy, which is modeled via Inverse Reinforcement Learning (IRL) in a Markov Decision Process (MDP) framework. These beliefs are updated by observing users’ test responses and the robot demonstrations they have seen (Jayaraman et al., 2024). Demonstrations are selected based on the information gain estimated from simulated counterfactuals, and belief updates are performed with custom Bayesian (particle) filters.
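The sketch below illustrates the two mechanisms in this paragraph under simplifying assumptions that are ours, not the paper's. A learner's belief is a set of weighted particles over unit-norm 2-D reward weights `w`. Each demonstration or test item is summarized by a hypothetical constraint vector `c` (the feature difference between the robot's choice and a counterfactual alternative), and a learner holding `w` endorses the robot's choice with probability `sigmoid(BETA * w·c)`. Demonstration selection uses the mutual information between the learner's binary response and the particle hypotheses as a stand-in for the information-gain criterion. `BETA`, `likelihood`, `update`, `info_gain`, and `select_demo` are illustrative names only.

```python
import math
import random

BETA = 5.0  # hypothetical rationality parameter: higher = more deterministic responses


def likelihood(w, c, agreed):
    """P(response | w): a learner with reward weights w endorses the robot's
    choice over the counterfactual with probability sigmoid(BETA * w . c)."""
    score = sum(wi * ci for wi, ci in zip(w, c))
    p_agree = 1.0 / (1.0 + math.exp(-BETA * score))
    return p_agree if agreed else 1.0 - p_agree


def update(particles, weights, c, agreed, rng):
    """Bayesian reweighting of the particles, with multinomial resampling
    when the effective sample size drops below half the particle count."""
    new_w = [wt * likelihood(p, c, agreed) for p, wt in zip(particles, weights)]
    total = sum(new_w)
    new_w = [x / total for x in new_w]
    ess = 1.0 / sum(x * x for x in new_w)
    if ess < len(particles) / 2:
        particles = rng.choices(particles, weights=new_w, k=len(particles))
        new_w = [1.0 / len(particles)] * len(particles)
    return particles, new_w


def binary_entropy(p):
    """Entropy (in nats) of a Bernoulli(p) variable."""
    return -sum(x * math.log(x) for x in (p, 1.0 - p) if x > 0)


def info_gain(particles, weights, c):
    """Mutual information I(Y; W) = H(Y) - E_W[H(Y | W)] between the learner's
    binary response Y to item c and the hypothesis W under the current belief."""
    p_i = [likelihood(p, c, True) for p in particles]
    p_yes = sum(wt * pi for wt, pi in zip(weights, p_i))
    expected_cond = sum(wt * binary_entropy(pi) for wt, pi in zip(weights, p_i))
    return binary_entropy(p_yes) - expected_cond


def select_demo(particles, weights, candidates):
    """Greedily pick the candidate whose outcome is most informative."""
    return max(candidates, key=lambda c: info_gain(particles, weights, c))


# Usage: 500 particles spread uniformly over unit-norm 2-D reward weights.
rng = random.Random(0)
angles = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(500)]
particles = [(math.cos(a), math.sin(a)) for a in angles]
weights = [1.0 / len(particles)] * len(particles)

candidates = [(1.0, 0.0), (0.0, 1.0), (0.6, 0.8)]  # hypothetical constraint vectors
chosen = select_demo(particles, weights, candidates)
# Suppose the learner's test response endorses the robot's choice for `chosen`.
particles, weights = update(particles, weights, chosen, agreed=True, rng=rng)
```

After the update, particle mass shifts toward reward weights consistent with the endorsed constraint. Mutual information is used here because it is non-negative and directly measures how much a single response is expected to narrow the belief.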

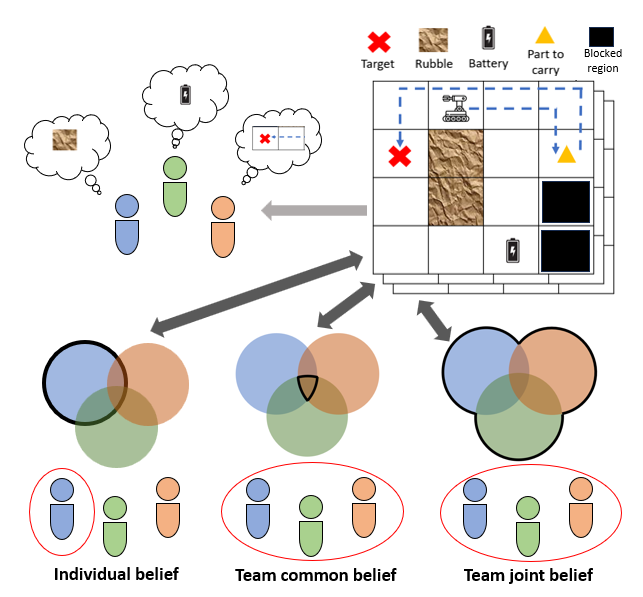

This illustration highlights the complexity of teaching human groups by modeling different belief states. Top-left shows three individuals with different beliefs about the robot’s decision-making. These beliefs are used to generate targeted or aggregated representations shown at the bottom: individual beliefs (distinct understanding per person), team common belief (intersection of all), and team joint belief (union of all). The robot uses these representations to adapt its explanations for improved understanding across the team.
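One way to make the "intersection" and "union" aggregations concrete is sketched below, assuming (as a simplification of our own) that every team member's belief is a probability distribution over a shared, discrete set of hypotheses about the robot's policy. The common belief is taken as the normalized product of individual beliefs, so only hypotheses everyone still considers plausible keep mass; the joint belief is taken as an equal-weight mixture, so any hypothesis someone considers plausible keeps mass. The hypothesis labels and member names are hypothetical.

```python
import math


def normalize(belief):
    """Rescale a belief so its probabilities sum to 1."""
    total = sum(belief.values())
    if total == 0:
        raise ValueError("belief has zero total mass (e.g. no shared hypothesis)")
    return {h: v / total for h, v in belief.items()}


def common_belief(beliefs):
    """'Intersection': product of individual probabilities, so only hypotheses
    that every member still considers plausible keep mass."""
    hyps = sorted(set().union(*beliefs))
    return normalize({h: math.prod(b.get(h, 0.0) for b in beliefs) for h in hyps})


def joint_belief(beliefs):
    """'Union': equal-weight mixture, so any hypothesis that some member
    considers plausible keeps mass."""
    hyps = sorted(set().union(*beliefs))
    return normalize({h: sum(b.get(h, 0.0) for b in beliefs) for h in hyps})


# Three hypothetical team members with different beliefs.
alice = {"h1": 0.5, "h2": 0.5}
bob = {"h2": 0.5, "h3": 0.5}
carol = {"h1": 0.25, "h2": 0.5, "h3": 0.25}
team = [alice, bob, carol]

print(common_belief(team))  # {'h1': 0.0, 'h2': 1.0, 'h3': 0.0}
print(joint_belief(team))   # {'h1': 0.25, 'h2': 0.5, 'h3': 0.25}
```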

The closed-loop teaching framework draws on insights from the education literature to adaptively generate demonstrations based on individual and aggregated team beliefs. Human learners progress through a sequence of lessons (scaffolding), each covering a concept of increasing complexity. Each lesson contains demonstrations (examples) of robot behavior, check-in tests that evaluate learners’ understanding of the underlying concept, and feedback on their test performance.
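The lesson structure can be sketched as a simple loop: lessons run in order of complexity, and within each lesson the learner sees a demonstration, takes a check-in test, and receives feedback, with another demonstration shown only if the test is failed. This is a toy illustration under our own assumptions: `Lesson`, `SimulatedLearner`, `teach`, the `max_rounds` cap, and the deterministic "needs k demonstrations" learner are all hypothetical, and the belief-driven demonstration choice of the actual framework is reduced to picking the lesson's next demonstration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Lesson:
    concept: str
    complexity: int
    demos: list[str]


@dataclass
class SimulatedLearner:
    # Hypothetical: number of demonstrations needed to grasp each concept.
    demos_needed: dict[str, int]
    seen: dict[str, int] = field(default_factory=dict)

    def watch(self, lesson: Lesson, demo: str) -> None:
        self.seen[lesson.concept] = self.seen.get(lesson.concept, 0) + 1

    def take_test(self, lesson: Lesson) -> bool:
        return self.seen.get(lesson.concept, 0) >= self.demos_needed.get(lesson.concept, 1)


def teach(learner: SimulatedLearner, lessons: list[Lesson], max_rounds: int = 3):
    """Scaffolded loop: demo -> check-in test -> feedback, repeated until pass."""
    log = []
    for lesson in sorted(lessons, key=lambda lsn: lsn.complexity):
        for round_idx in range(max_rounds):
            demo = lesson.demos[min(round_idx, len(lesson.demos) - 1)]
            learner.watch(lesson, demo)
            passed = learner.take_test(lesson)
            feedback = "correct" if passed else "incorrect"
            log.append((lesson.concept, round_idx + 1, feedback))
            if passed:
                break  # move on to the next, more complex concept
    return log


lessons = [
    Lesson("avoid hazards", 2, ["demo_3", "demo_4"]),
    Lesson("shortest path", 1, ["demo_1", "demo_2"]),
]
learner = SimulatedLearner(demos_needed={"shortest path": 1, "avoid hazards": 2})
log = teach(learner, lessons)
print(log)
# [('shortest path', 1, 'correct'), ('avoid hazards', 1, 'incorrect'), ('avoid hazards', 2, 'correct')]
```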

The research examined how teaching strategies tailored to group or individual beliefs can benefit groups with different mixes of learner capability. We found the group belief strategy to be advantageous for groups composed mostly of proficient learners, while individual belief strategies were better suited to groups composed mostly of naive learners. We validated these findings in simulation (Jayaraman et al., 2024) and in empirical online studies (Jayaraman et al., 2025).
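Read as a rule of thumb, the finding could be encoded as follows. This is a hypothetical heuristic of our own, not a procedure from the studies: the `choose_strategy` name, the binary "proficient"/"naive" labels, and the majority `threshold` of 0.5 (our reading of "mostly") are all assumptions.

```python
def choose_strategy(learner_levels, threshold=0.5):
    """Return 'group' when more than `threshold` of learners are proficient,
    otherwise 'individual' (hypothetical rule of thumb)."""
    if not learner_levels:
        raise ValueError("empty group")
    share = sum(level == "proficient" for level in learner_levels) / len(learner_levels)
    return "group" if share > threshold else "individual"


print(choose_strategy(["proficient", "proficient", "naive"]))  # group
print(choose_strategy(["naive", "naive", "proficient"]))       # individual
```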

This research lays the groundwork for real-time, adaptive explainable AI in multi-agent human-AI teams and applies to scenarios involving group trust calibration, dynamic policy explanation, and collective behavior modeling. It also has implications for interactive AI systems, collaborative robotics, and autonomous decision-support tools.

References

2025

- Explaining Robot Behavior to Groups: Machine Teaching for Transparent Decision-Making. Manuscript in preparation, 2025.

2024

- Understanding Robot Minds: Leveraging Machine Teaching for Transparent Human-Robot Collaboration Across Diverse Groups. In 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024.

- Modeling human learning of demonstration-based explanations for user-centric explainable AI. Presented at the Explainability for Human-Robot Collaboration workshop at the ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2024.